The Way Online Video Streaming Works Has Changed

In the video ecosystem, it’s rare for competing technologies to peacefully coexist for long.

The now-famous videotape format war of the late 1970s and 1980s demonstrated the speed with which the industry could tip in favor of one technology, and the devastating impact this could have on its competition. When the industry cast its collective vote for VHS in 1975, it took less than three years for the technology to overtake Betamax. In a subsequent market shift between 1999 and 2004, VHS sales were overtaken, and then quickly dwarfed, by DVDs.

From Beta to VHS to DVD: Case studies in how suddenly the video ecosystem can tip.

Sources: Business History Review, Nexus Research.

Online media has experienced similar seismic shifts. In January 2010, only 10% of online videos were encoded using the H.264 compression format. Less than two years later, that number had ballooned to 80% (MeFeedia).

At each of these tipping points, market inertia yielded to a media technology whose time had come. And in 2015, the industry is approaching its next point of no return.

In the last ten to twelve years, online video has become essential to how people are entertained, how students learn, and how businesses communicate. It’s estimated that video already accounts for more than two-thirds of global consumer internet traffic (Cisco), and that large businesses already stream over 14 hours of video per employee per month (Gartner).

During this time, media distribution technology has evolved in four phases.

The Evolution of Online Media Streaming Technology

1. HTTP Download

When video files were first shared online, they were distributed using Hypertext Transfer Protocol (HTTP) — the same delivery mechanism used by HTML pages, images, documents and other types of web-based content.

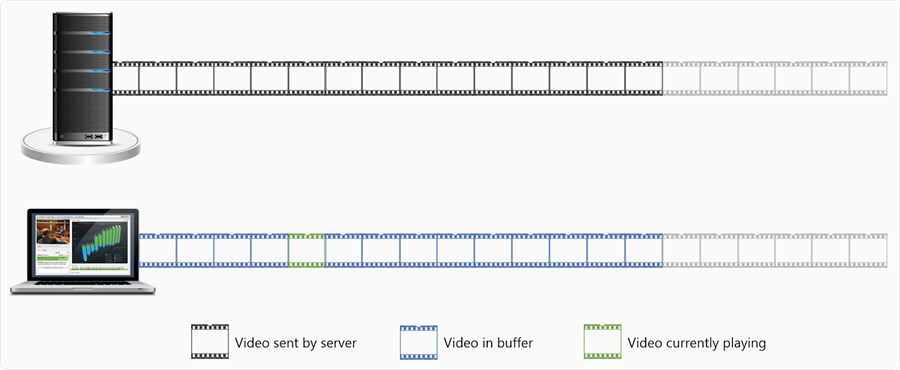

Initially, videos had to be downloaded in their entirety before playback could begin. This process, called download and play, had several notable shortcomings. First, dial-up speeds of 28 to 56 kbps meant that users would almost always encounter long playback delays. Second, there was no mechanism to efficiently scale to multiple simultaneous viewers. Finally, limited bandwidth was often wasted on unwatched segments of video. For example, if a user clicked on a 10-minute video and only watched the first three minutes, the remaining seven minutes would have been superfluously downloaded across the network.
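The arithmetic behind these shortcomings can be sketched in a few lines. This is only a rough illustration: the function names are hypothetical, the 5 MB clip size is an assumed figure that does not come from the article, and the calculation assumes a constant bitrate and ignores protocol overhead.

```python
def download_seconds(file_megabytes: float, link_kbps: float) -> float:
    """Seconds needed to fetch a whole file, ignoring protocol overhead."""
    if link_kbps <= 0:
        raise ValueError("link_kbps must be positive")
    kilobits = file_megabytes * 8 * 1000  # 1 MB taken as 1,000 kB
    return kilobits / link_kbps


def wasted_fraction(total_minutes: float, watched_minutes: float) -> float:
    """Share of a fully downloaded video that was never watched.

    Assumes a constant bitrate, so minutes map directly to bytes.
    """
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    watched = min(max(watched_minutes, 0.0), total_minutes)
    return (total_minutes - watched) / total_minutes


# The article's example: a 10-minute video abandoned after three minutes.
print(wasted_fraction(10, 3))                 # 0.7

# Assumed example: a 5 MB clip over a 56 kbps dial-up link.
print(round(download_seconds(5, 56) / 60, 1))  # about 11.9 minutes
```

Under these assumptions, 70% of the transfer in the article's example is wasted, and even a small clip keeps a dial-up viewer waiting for many minutes before playback can start.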

Apple addressed some of the issues of HTTP-based video download when it released support for Fast Start, more commonly known as progressive HTTP download. This approach placed important metadata at the beginning of the media file, allowing video playback to begin before the entire file was downloaded. Although progressive download is still in use today, it was largely superseded in the early 2000s by custom protocols and servers built for a new type of online video delivery called streaming.
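To make the idea of metadata placement concrete, here is a minimal sketch that checks whether a file is laid out for progressive playback. It assumes a QuickTime/MP4-style container, where the index metadata lives in a top-level `moov` box and the audio/video samples in `mdat`; the article does not name these boxes, and the helper names (`top_level_boxes`, `is_fast_start`, `make_box`) are hypothetical.

```python
import io
import struct
from typing import BinaryIO, Iterator, Tuple


def top_level_boxes(stream: BinaryIO) -> Iterator[Tuple[str, int]]:
    """Yield (type, size) for each top-level box, reading headers only."""
    while True:
        header = stream.read(8)
        if len(header) < 8:
            return  # end of data
        size, box_type = struct.unpack(">I4s", header)
        header_len = 8
        if size == 1:  # a 64-bit size follows the type field
            extended = stream.read(8)
            if len(extended) < 8:
                return
            size = struct.unpack(">Q", extended)[0]
            header_len = 16
        yield box_type.decode("latin-1"), size
        if size == 0 or size < header_len:
            return  # box runs to end of file, or header is malformed
        stream.seek(size - header_len, io.SEEK_CUR)  # skip the payload


def is_fast_start(stream: BinaryIO) -> bool:
    """True if the metadata box (moov) comes before the media data (mdat)."""
    for box_type, _ in top_level_boxes(stream):
        if box_type == "moov":
            return True
        if box_type == "mdat":
            return False
    return False


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a tiny synthetic box for demonstration purposes."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


ftyp = make_box(b"ftyp", b"isom")
moov = make_box(b"moov", b"\x00" * 16)
mdat = make_box(b"mdat", b"\x00" * 64)

print(is_fast_start(io.BytesIO(ftyp + moov + mdat)))  # True
print(is_fast_start(io.BytesIO(ftyp + mdat + moov)))  # False
```

With the metadata first, a player can start decoding as soon as the opening bytes arrive. With the metadata last, it generally has to wait for the end of the file.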

Progressive HTTP download improved startup time, but still suffered from wasted bandwidth and limited scale.

2. Custom Streaming Protocols

Compared to other types of content shared online, video files are massive. A single minute of iPhone video can take up as much as 80 to 120 MB of disk space. In that same amount of space, you could store between 250 and 350 average-sized Word documents (Microsoft).

This characteristic made it difficult to distribute video files over bandwidth-constrained networks. So as video became more prevalent on the web and in corporate networks, media companies and software vendors began developing custom protocols for video streaming. RealNetworks and Netscape collaborated on the development and standardization of the Real Time Streaming Protocol (RTSP). Adobe, through its acquisition of Macromedia, implemented the Real Time Messaging Protocol (RTMP) for Flash-based video streaming. Microsoft developed a third streaming protocol, Microsoft Media Server (MMS), for use in various Windows applications.

RTSP, RTMP, and MMS all treated video as a special case. They constructed “overlay networks” in which protocol-specific streaming servers sat alongside traditional HTTP servers. When a user initiated a request to play back video, the request was routed to the streaming server, which then opened a persistent (or “stateful”) connection to the user’s video player.

Custom streaming protocols required specialized servers, firewall configuration, and a separate caching infrastructure.

Custom streaming protocols overcame many of the challenges of HTTP progressive download. Video was buffered, processed and played back as it was being delivered over the network, enabling users to abandon a video mid-stream with minimal bandwidth waste. Random access was supported, enabling viewers to seek and quickly start playback from any point in the video. The persistent connection from streaming server to client provided more predictable latency. And in all cases, these overlay networks helped organizations offload video traffic from the primary WAN transport, reducing the chance that video congestion would jeopardize the delivery of higher-priority information and transactional data.

RTMP, RTSP, and MMS were not without limitations, however. Because these protocols treated video as a special-case data type, they increased the cost and complexity of video delivery. First, the protocols required a separate set of specialized servers to be deployed throughout the corporate network, adding hardware and software infrastructure costs. Second, streaming protocols created a binding between the delivery and caching mechanisms. This required organizations to support two separate caching technologies (one for HTTP-based traffic, one for video), effectively doubling the complexity of network management. Third, RTMP, RTSP, and MMS required administrators to open additional network ports for communication (1935, 554, and 1755 respectively). This expanded the attack surface of the network and increased the likelihood that the protocols would be blocked by corporate firewalls. Finally, custom streaming protocols were often incompatible with mobile devices. For example, RTMP required Flash for playback, a browser plug-in that famously isn’t supported on iOS devices. Beyond the iOS ecosystem, mobile clients experience frequent connectivity interruptions and IP address changes, which would often require the active RTMP connection to be re-established multiple times during a single event.
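The firewall problem can be illustrated with a small sketch. The port numbers for RTMP, RTSP, and MMS come from the paragraph above. The standard web ports (80 and 443), the example hostnames, and the function names are assumptions added for illustration, and the "firewall" is simply a set of allowed ports rather than a model of any real product.

```python
from urllib.parse import urlsplit

# Default ports per URL scheme. The streaming ports are those cited above;
# 80 and 443 are the standard ports for HTTP and HTTPS.
DEFAULT_PORTS = {"http": 80, "https": 443, "rtmp": 1935, "rtsp": 554, "mms": 1755}


def required_port(url: str) -> int:
    """Port a client must reach to fetch this URL."""
    parts = urlsplit(url)
    if parts.port is not None:  # an explicit port overrides the default
        return parts.port
    if parts.scheme not in DEFAULT_PORTS:
        raise ValueError(f"unknown scheme: {parts.scheme!r}")
    return DEFAULT_PORTS[parts.scheme]


def blocked_by_firewall(url: str, open_ports=frozenset({80, 443})) -> bool:
    """True if the URL needs a port that the (hypothetical) firewall keeps closed."""
    return required_port(url) not in open_ports


for url in (
    "http://media.example.com/video.mp4",
    "rtmp://media.example.com/live/stream",
    "rtsp://media.example.com/clip",
    "mms://media.example.com/broadcast",
):
    print(url, "blocked" if blocked_by_firewall(url) else "allowed")
```

On a network that only permits ordinary web traffic, the HTTP request passes while all three custom protocols are stopped until an administrator opens their ports.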

3. Multicast Video Streaming Technology

The claim that multicast was a distinct phase of online video delivery is a generous one, considering the technology never reached critical mass in either the enterprise or on the consumer internet. However, there was intense interest in multicast for video in the mid-2000s, and the technology persists in some corporate networks, so it bears discussion.

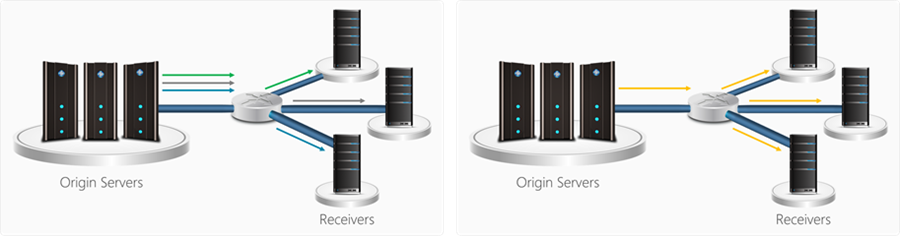

Multicast was a network technology that allowed a sender to distribute the same data to multiple recipients at the same time. Conceptually, it was not unlike listening to the radio. A single radio signal is sent to all listeners rather than unique signals being sent to each person tuning in. When implemented properly, multicast created incredible efficiencies in data delivery. This drove a period of interest in the use of multicast for video delivery.

Using multicast, an organization could theoretically deliver live video across the corporate network using a fraction of the bandwidth required by traditional unicast transmission. As a result, organizations often looked to multicast as a way to get additional ROI from their bandwidth-constrained networks rather than upgrade network infrastructure.

Unicast transmission (left) sends a unique stream for each connected client, while multicast (right) sends a single stream that is shared by all subscribing clients.
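A back-of-the-envelope comparison shows why the savings looked so attractive. The sketch below models only the load on a single shared link, with hypothetical function names and assumed figures (500 viewers, a 2 Mbps stream). It ignores protocol overhead and everything else about real multicast routing.

```python
def unicast_mbps(viewers: int, stream_mbps: float) -> float:
    """Load on a shared link when every viewer receives their own copy."""
    return viewers * stream_mbps


def multicast_mbps(viewers: int, stream_mbps: float) -> float:
    """Load on a shared link when one copy serves every subscriber."""
    return stream_mbps if viewers > 0 else 0.0


viewers, bitrate = 500, 2.0  # assumed: 500 employees watching a 2 Mbps webcast
print(unicast_mbps(viewers, bitrate))    # 1000.0
print(multicast_mbps(viewers, bitrate))  # 2.0
```

In this idealized case the unicast load grows linearly with the audience, while the multicast load stays at the bitrate of a single stream.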

Unfortunately, the infrastructure requirements of multicast made it infeasible for most organizations. In order to distribute video (or any data) using multicast, the source, recipients, and connecting network infrastructure all had to support the protocol. Specifically, every router, hub, switch, and firewall within a corporate network had to be multicast-compliant. This requirement of homogeneous infrastructure was neither practical nor resilient.

For example, if an attempted multicast communication failed, the fallback would typically have been a traditional unicast transmission. In most cases, this unicast transmission didn’t benefit from any network optimization such as data caching or other WAN acceleration techniques, since multicast was the only implemented form of optimization.

In addition, because multicast required a homogeneous networking environment, it was fundamentally at odds with the network topologies that still dominate business and consumer online communication. The internet is built for a wide range of network speeds, connection types, quality of service (QoS), and endpoint devices. Its foundation is HTTP, a stateless, media-independent protocol built specifically to work in this heterogeneous environment. Similarly, the networks behind corporate firewalls are increasingly heterogeneous. The trend toward bring-your-own-device (BYOD) means that employees are carrying a range of tablets and smartphones with varied capabilities and networking requirements. And ongoing industry consolidation makes it less and less likely that a newly acquired branch office in London uses the same network architecture as the home office in Seattle.

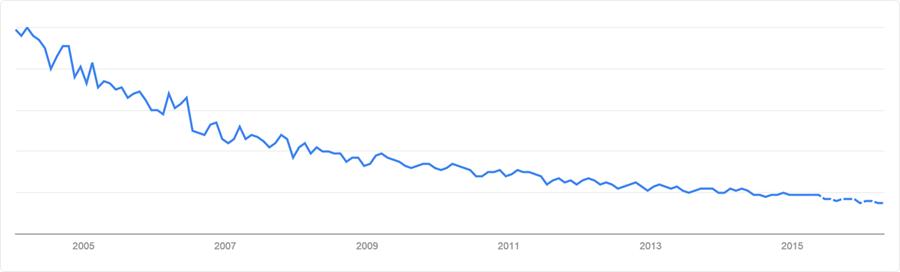

In summary, multicast was a lofty but unrealistic aspiration for video delivery. In the past decade, interest in the technology has been steadily declining.

Interest in multicast, 2004-2015. Source: Google Trends.

4. Modern HTTP Streaming

In 2008, Microsoft introduced Smooth Streaming, a hybrid approach to video delivery that offered many benefits of custom streaming protocols while leveraging HTTP and existing network infrastructure. Smooth Streaming also supported adaptive bitrate (ABR) delivery, providing viewers with faster startup and seek times, minimal buffering, and a smoother playback experience.

Adaptive bitrate streaming provides faster startup and seek times, minimal buffering, and a smooth playback experience by dynamically adjusting video quality based on client connection speed.
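The core decision in adaptive bitrate playback can be sketched as a simple selection rule. This is not how Smooth Streaming or any particular player is implemented. The bitrate ladder, the 0.8 safety margin, and the function name are all assumptions chosen for illustration.

```python
from typing import Sequence


def pick_bitrate(ladder_kbps: Sequence[int], measured_kbps: float,
                 safety: float = 0.8) -> int:
    """Choose the highest encoded bitrate that fits the measured throughput.

    Only a fraction (safety) of the measured throughput is budgeted, leaving
    headroom for fluctuation. If nothing fits, fall back to the lowest
    bitrate so that playback can continue.
    """
    if not ladder_kbps:
        raise ValueError("ladder_kbps must not be empty")
    budget = measured_kbps * safety
    affordable = [rate for rate in ladder_kbps if rate <= budget]
    return max(affordable) if affordable else min(ladder_kbps)


LADDER = [400, 800, 1500, 3000, 6000]  # hypothetical renditions, in kbps

print(pick_bitrate(LADDER, 2500))   # 1500 (budget is 2000 kbps)
print(pick_bitrate(LADDER, 300))    # 400  (nothing fits; use the lowest)
print(pick_bitrate(LADDER, 10000))  # 6000 (top rendition fits)
```

A player would typically re-run a rule like this as each short segment of video is requested, so quality rises and falls with the viewer's connection instead of stalling.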

HTTP-based streaming quickly gained momentum, and other market leaders were quick to invest in the technology. In 2009, Apple entered the market with the introduction of HTTP Live Streaming (HLS). In 2010, Adobe shifted its focus away from custom streaming protocols with the release of HTTP Dynamic Streaming (HDS). And since 2010, major streaming and media companies, including Microsoft, Google, Adobe, Netflix, Ericsson, and Samsung, have been collaborating on MPEG-DASH, an open standard for adaptive video streaming over HTTP.

Innovations like Smooth Streaming, HLS, HDS, and DASH have driven a resurgence in HTTP-based video delivery, and an upheaval in the way that businesses distribute video over their networks.

Learn More About Online Video Streaming

In our latest white paper, Modern Video Streaming in the Enterprise: Protocols, Caching, and WAN Optimization, we’ll take a deeper look into the technical shifts driving the move toward Modern Streaming, including the seven characteristics that make a video streaming protocol modern.

We’ll also look at the new opportunities Modern Streaming presents for organizations to use existing network infrastructure for more scalable, cost-effective video delivery.